AI and the Four-Day Work Week

The United Auto Workers’ (UAW) Stand Up Strike has recently led to tentative deals on new union contracts with Ford, Stellantis, and General Motors. The strike was notable for a variety of reasons — unique striking tactics, taking on all the “Big 3” automakers, and a stirring callback to the historic 1936–1937 Sit Down Strikes. In addition to demands for better pay, the reinstatement of cost-of-living adjustments, and an end to the tiered employment system — all of which the union won in new contracts — one unique (unmet) demand has attracted discursive attention: the call for a four-day, thirty-two hour workweek at no loss of pay. The demand addresses the unsettling reality of autoworkers laboring for over seventy hours each week to make ends meet.

The history of the forty-hour workweek is intimately tethered to autoworkers; workers at Ford were among the first to enjoy a forty-hour workweek in 1926, a time when Americans regularly worked over 100 hours per week. Over a decade later, the labor movement won the passage of the Fair Labor Standards Act (FLSA), codifying the forty-hour workweek as well as rules pertaining to overtime pay, minimum wages, and child labor. Sociologist Jonathan Cutler explains that, at the time of the FLSA’s passage, UAW leaders had their eye on the fight towards a 30-hour workweek.

The four-day workweek has garnered attention in recent years as companies have experimented with a 100–80–100 model (100% of the pay for 80% of the time and 100% of the output). These companies boast elevated productivity, profits, morale, health, and general well-being. The altered schedule proved overwhelmingly popular among CEOs and employees alike. Many workers claimed no amount of money could persuade them to return to a five-day week. Accordingly, 92% of companies intend to continue with the four-day workweek indefinitely. It’s just a good policy all around: good for business, good for health, good for happiness.
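The arithmetic behind the 100–80–100 model can be made explicit. The following is a minimal sketch, not a description of how any pilot measured productivity; the function name `required_hourly_gain` is a hypothetical helper introduced here for illustration. It shows that keeping 100% of the output in 80% of the time implies roughly a 25% rise in output per hour worked.

```python
def required_hourly_gain(time_fraction: float, output_fraction: float = 1.0) -> float:
    """Return the fractional rise in output per hour needed to reach
    `output_fraction` of the old output in `time_fraction` of the old hours.

    Hypothetical helper for illustration only.
    """
    if not 0 < time_fraction <= 1:
        raise ValueError("time_fraction must be in the interval (0, 1]")
    # Output per hour scales as output / time; subtract 1 to get the increase.
    return output_fraction / time_fraction - 1.0


# 100-80-100: same output, 80% of the hours.
four_day_gain = required_hourly_gain(0.8)
print(f"Output per hour must rise by about {four_day_gain:.0%}")
```

Whether that gain comes from less wasted time, better morale, or new tools such as AI is a separate question that the arithmetic does not answer.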

While these overwhelmingly successful pilots have warmed businesses and employees up to the notion of shortening the week, one increasingly relevant contextual element may emphatically push the conversation forward: artificial intelligence (AI). Goldman Sachs has estimated that AI will boost productivity for two-thirds of American workers. Many white-collar professions in particular will see dramatic changes in efficiency through the integration of AI in the workplace. With a steadily shifting reliance from human labor to AI should come accessible leisure time to actually enjoy the fruits of one’s labor. We ought to recognize that prosperity is not an end in itself, but is instrumental to well-being. If our AI-driven enhanced productivity can sustain high output while providing greater time for family, good habits, and even consumption, our spirits, our health, and even our economy will reap the benefits.

However, a collective action problem hinders the full-throttle, nationwide embrace of a shorter workweek. While many individual businesses find the four-day week profitable, it may not prima facie seem to be in a company’s interest to cede any labor hours to the competition; firms may expect to be outperformed if they do not match their competitors’ inputs. But if all firms in the market adopted a four-day week (or were subject to regulations that secured it), they would be on a level playing field, and the extra day off might drive up aggregate demand so forcefully that it compensates firms with heavy returns. It follows that the best way to realize a shortened week is federal legislation, i.e., amending the FLSA to codify a 32-hour workweek and mandate the corresponding overtime pay.
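To see what moving the overtime threshold would mean in practice, here is a small sketch. It assumes the FLSA’s time-and-a-half overtime rate and a hypothetical wage of $30 per hour; the function `weekly_pay` is an illustrative helper, not language from any bill.

```python
def weekly_pay(hours: float, hourly_rate: float,
               overtime_threshold: float, overtime_multiplier: float = 1.5) -> float:
    """Weekly pay when hours beyond `overtime_threshold` earn a premium rate.

    Illustrative helper; 1.5x is the FLSA's time-and-a-half overtime rate.
    """
    regular_hours = min(hours, overtime_threshold)
    overtime_hours = max(hours - overtime_threshold, 0)
    return (regular_hours * hourly_rate
            + overtime_hours * hourly_rate * overtime_multiplier)


rate = 30.0  # hypothetical hourly wage
print(weekly_pay(40, rate, overtime_threshold=40))  # today's threshold: 1200.0
print(weekly_pay(40, rate, overtime_threshold=32))  # 32-hour threshold: 1320.0
```

Under these assumptions, an employer who keeps staff at 40 hours pays 10% more for the same week, which is the incentive a lower threshold creates to shorten schedules.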

Representative Mark Takano of California has introduced a bill — alongside several colleagues — to accomplish just that, endorsed by a host of advocacy organizations, labor federations, and think tanks. Senate Health, Education, Labor, and Pensions Committee Chair Bernie Sanders has enthusiastically endorsed the idea, specifically citing the advantages AI brings to the workplace. Regarding the proposed legislation, Heidi Shierholz, President of the Economic Policy Institute, powerfully stated the following:

“Many workers are struggling to balance working more hours to earn more income against having more time to focus on themselves, their families, and other pursuits. However, while studies have shown that long working hours hurt health and productivity, taking control of work-life balance is often a privilege only afforded to higher-earning workers… This bill would help protect workers against the harmful effects of overwork by recognizing the need to redefine standards around the work week. Reducing Americans’ standard work week is key to achieving a healthier and fairer society.”

Despite the rosy picture I have painted, the odds of getting Fridays off forever anytime soon — whether through union action or new labor law — are slim, just as they were for the UAW. Sorry. Such are the pragmatics of political and economic reality. However, as AI continues to change the game, we will be positioned to ask cutting questions about the nature of work — to be creative and imagine what a new world in the age of the AI Revolution could look like. Maybe this is a part of it: humanity’s ultimate achievement culminates in… Thirsty Thursdays. Every week.

The future of AI: egalitarian or dystopian?

Once upon a time, artificial intelligence (AI) was viewed as distant and unachievable; it was regarded as nothing more than a fantasy to furnish the plots of science fiction stories. We have made numerous breakthroughs since, with AI software now powerful enough to understand natural language, navigate unfamiliar terrain, and augment scientific research. As COVID-19 reduced our ability to interact with each other, we’ve seen AI-powered machines step in to fill that void, and AI used to advance medical research towards better treatments. This ubiquity of AI may only be the beginning, with experts projecting that AI could contribute a staggering $15.7 trillion to the global economy by the end of the decade. Unsurprisingly, many prosperous members of society view the future of AI optimistically, as one of ever-increasing efficiency and profit. Yet many on the other side of the spectrum look on much more apprehensively: AI may have inherited the best of human traits, our intelligence, but it has also inherited one of humanity’s worst: our bias and prejudice. AI, fraught with discrimination, is being used to perpetuate systemic inequalities. If we fail to overcome this, an AI-dominated future would be bleak and dystopian. We would be moving forward in time, yet backwards in progress, accelerating mindlessly towards a less equitable society.

Towards dystopia is where we’re headed if we don’t reverse course. AI is increasingly being used to make influential decisions in people’s lives — decisions that are often biased. This is due to AI being trained on past data to make future decisions. This data can often have bias which is then inherited by the AI. For instance, AI hiring tools are often being used to assess job applicants. Trained on past employee data which consists of mostly men, the AI absorbs this bias and continues the cycle of disfavoring women, which perpetuates the lack of diversity in key industries such as tech. This is absolutely unacceptable, and that’s to say nothing of the many other ways AI can be used to reinforce inequality. Known as the ‘tech to prison pipeline’, AI — trained on historical criminal data — is being used in criminal justice to determine verdicts. However, African Americans are overrepresented in the training data, and as such, the AI has been shown to hand down harsher sentences for African Americans.
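The way a model inherits bias from past data can be shown with a toy example. The sketch below is not a real hiring tool: the records are invented, and the “model” simply memorizes historical hiring rates per group. It is meant only to illustrate how skewed history turns into skewed recommendations.

```python
from collections import defaultdict

# Hypothetical historical records: (group, was_hired). Invented for illustration.
history = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 6 + [("women", False)] * 14
)


def train(records):
    """'Learn' the historical hiring rate of each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += was_hired  # True counts as 1, False as 0
    return {group: hired[group] / total[group] for group in total}


def recommend(model, group, threshold=0.5):
    """Recommend an applicant if their group's past hiring rate clears the bar."""
    return model[group] >= threshold


model = train(history)
print(model)                      # past rates: men 0.6, women 0.3
print(recommend(model, "men"))    # True
print(recommend(model, "women"))  # False
```

Two equally qualified applicants receive different recommendations here purely because of who was hired in the past. Real systems are far more complex, but the same mechanism can operate through features that act as proxies for group membership.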

To move towards a future with AI that is not only intelligent but fair, we must enact regulation to outlaw discriminatory uses and ensure that the developers of AI software are diverse, so that a range of perspectives is reflected in the software they create.

Perhaps counterintuitively, a world with fair AI will see social justice advanced even further than a world before any AI. AI has become unfair because humans themselves hold a great deal of bias, which AI has absorbed. But with fair AI replacing humans in decision making, we will, by definition, be at a state of zero bias, and thus greater equality.

Achieving fair AI may be the key to a better future — one of increased economic prosperity, furthered scientific progress, and more equity. But in the meantime, we must be diligent in ensuring that the AI being used reflects the best of humanity, rather than our worst.

How Post-9/11 Surveillance Reshaped Modern-Day America

Following the events of September 11, 2001, legal barriers against surveillance were immediately cast aside, and legal justifications for the government to obtain sensitive information from any American grew. For many, surveillance technology in modern-day America has provided a gateway of opportunities as well as added safety. Yet the debate continues over whether this is an invasion of an individual’s right to privacy or a legitimate governmental power. The United States’ growing use of invasive and controlling surveillance technologies is turning it into a dystopian nightmare.

The Lasting Effects of 9/11

The most significant shift in US surveillance occurred in the aftermath of the 9/11 terrorist attacks. “The telescreen received and transmitted simultaneously. Any sound that Winston made, above the level of a very low whisper, would be picked up by it; moreover, so long as he remained within the field of vision which the metal plaque commanded, he could be seen as well as heard. There was, of course, no way of knowing whether you were being watched at any given moment” (Orwell 5). In the novel 1984, Orwell creates a dystopian society controlled by an overpowering entity known as “the Party” and a symbol representing that dictatorship, referred to as Big Brother. “BIG BROTHER IS WATCHING YOU,” one of the most popular as well as most frightening political slogans used during that time, stems from this recurring idea of power and propaganda. The notorious telescreen, a two-way screen that can see an individual as they see it, is one of the most renowned examples of technology used by the Party. The screen remains on at all times not so that the citizens can be informed of what is being projected to them, but rather so that Party members are able to monitor them. Though it may seem unlikely that the elites are able to track and observe every single person at any given moment, the possibility still remains. The mere existence of the telescreen therefore ensures that no one will dare to rebel against the Party. Every citizen must act according to the Party’s rules; otherwise a simple nod, a keen look, or just a tap of the foot can get them into an incredibly hostile situation. Everything that the citizens experience is limited. The Party has full authority and dominance over people when they are incapable of seeing the truth of reality.

“After 9/11, Congress rushed to pass the Patriot Act, ushering in a new era of mass surveillance. Over the next decade, the surveillance state expanded dramatically, often in secret. The Bush administration conducted warrantless mass surveillance programs in violation of the Constitution and our laws, and the Obama administration allowed many of these spying programs to continue and grow” (Toomey 3). Following the 9/11 terrorist attacks, the National Security Agency (NSA) and other intelligence agencies moved their focus from investigating criminal suspects to preventing terrorist strikes. They were keen to expand their use of technology, beginning with the passage of the USA PATRIOT Act. This dramatically increased the government’s authority to pry into people’s private lives with little to no authorization. This meant that phone conversations, emails, chats, and online browsing could all be monitored. It became legal for the government to get sensitive information from an American, regardless of whether or not the individual was actually suspected of wrongdoing. This is much like the telescreen in Orwell’s 1984. The Party is able to pry into and monitor its citizens’ lives through the telescreen, a two-way television screen that allows the government to see what people are doing at any given time, no matter their rank in society. Even though Orwell’s dystopia is taken to the extreme of a totalitarian regime, that does not change how closely the two resemble each other, or how this surveillance contributes to the growing dystopia America is today.

The Revelations of Edward Snowden

Edward Snowden, a former computer systems contractor for the National Security Agency (NSA), leaked highly classified information as well as thousands of secret NSA documents to the public. “People simply disappeared, always during the night. Your name was removed from the registers, every record of everything you had ever done was wiped out, your one-time existence was denied and then forgotten. You were abolished, annihilated: vaporized was the usual word” (Orwell 14). Surveillance of this kind is still happening today: “…the U.S. government was tapping into the servers of nine Internet companies, including Apple, Facebook and Google, to spy on people’s audio and video chats… as part of a surveillance program called Prism. In the same month, Snowden was charged with theft of government property, unauthorized communication of national defense information and willful communication of classified communications intelligence. Facing up to 30 years in prison, Snowden left the country, originally traveling to Hong Kong and then to Russia, to avoid being extradited to the U.S.” (Onion 3). In Orwell’s 1984, the Party dominates practically every aspect of society. What is seen, heard, and talked about is all consistently tracked to make sure no one rebels or falls out of line. If one were to revolt and present an idea opposing the government, vaporization would take place. In other words, the government has full authority to execute those who provoke it. In the real world the cost of rebelling is not as extreme, but the cautionary line is still present. Edward Snowden, a former computer systems contractor for the NSA, revealed thousands of secret documents about the NSA’s PRISM program. In the wake of the 9/11 attacks, the government established PRISM, a program that gave it access to a vast amount of sensitive information about Americans.
Snowden going public with the reports of the NSA’s undercover operations allowed a wide range of people to get a small glimpse of what was going on in their very devices. The U.S. Justice Department charged Snowden with theft of government property and two violations of the Espionage Act, charges that carried a sentence of up to 30 years in prison. Snowden fled the nation, first to Hong Kong, then to Russia, to avoid being extradited to the United States. This is very similar to “vaporization” in Orwell’s 1984. Though it is not taken to the very extreme, Snowden published documents that exposed the government’s surveillance tactics and the mass data collection happening in one’s very device. Fearing that this knowledge would become mainstream, the government treated it as an opposing idea, charging Snowden with crimes that carry up to thirty years in prison and driving him out of the nation. For the Party to maintain power, the citizens of the society of 1984 must be manipulated into submission, meaning that vaporization and the telescreen are just two among a plethora of control mechanisms. With America’s developing surveillance programs that contribute to data tracking and collection, Snowden was able to give a glimpse into what is happening behind one’s screen. Though Orwell’s 1984 is taken to the extreme, that does not change the fact that these two vastly similar cases could be the future of America as one may know it.

Surveillance in America’s Modernized Dystopian Society

Given the tremendous rise and impact of technology in particular, it is easy to see how American civilization is approaching a modern-day dystopia. “Every record has been destroyed or falsified, every book rewritten, every picture has been repainted, every statue and street building has been renamed, every date has been altered. And the process is continuing day by day and minute by minute. History has stopped. Nothing exists except an endless present in which the Party is always right” (Orwell 103). This shows how accustomed the civilization of 1984 has become to the rule of the Party. The Party’s efforts to regulate every aspect of the citizens’ lives come from a desire to keep them oblivious to the truth of their surroundings. They need to live in oblivion because it supports the Party’s ability to lie to them. The Party is then able to have full dominance over society and its decisions. Its goals reflect a craving for unrestrained command over its people, achieved by lying to them and controlling every aspect of their lives. Therefore, the Party prohibits any means of expression or communication that would undermine its immense authority over the citizens.

“When you zoom out, it’s easy to see that American society is approaching a modern-day dystopia as the once sci-fi-worthy stories of environmental destruction, technological control, and loss of human rights and freedoms creep to fruition. The eerie loss of individuality is looming right before your screen every time you passively press ‘accept’ on a new privacy policy and turn a blind eye to why your data is being collected. While it’s easy to ignore the data tracking that has become so commonplace” (Coonrod 2). “A majority of Americans believe their online and offline activities are being tracked and monitored by companies and the government with some regularity. Data-driven products and services are often marketed with the potential to save users time and money or even lead to better health and well-being. Still, large shares of U.S. adults are not convinced they benefit from this system of widespread data gathering. Some 81% of the public say that the potential risks they face because of data collection by companies outweigh the benefits…” (Auxier 1). When propaganda is intentionally spread to citizens of a society, they grow blind to the truth and become accustomed to an inhuman world. Ruling parties flourish when they subject the general public to a callous world through manipulation and deception. What is seen, what is said, and what is heard is all generated to brainwash an individual’s mind into conforming to “normal” society. Though this is seen all throughout Orwell’s 1984 with its various forms of propaganda, it is particularly visible today in modernized American society. When a user accepts or allows a new privacy policy on an app, data tracking is enabled on their device. Government forces are continually listening to and obtaining secret information about what is said, heard, and discussed, indicating that nearly everything is at risk.
Many new initiatives, foundations, online browsers, and advocacy groups are stepping forward to develop tools and technologies to reduce the quantity of personal data taken. However, most of the time, whether individuals are aware of it or not, their devices are secretly gathering data on them. These data applications continually tailor and transmit information to match the individual’s point of view, and often try to brainwash them with a constant feed of data. All things considered, societies tend to manipulate as well as deceive their people, which, in fact, is seen all throughout Orwell’s dystopian novel 1984. Though the novel takes this to the utmost extent, if this continues, what is known as America today will become a dystopia.

The expanding use of intrusive and controlling monitoring technology in the United States is turning the country into a growing dystopia. Orwell’s novel, 1984, represents how the Party grows stronger when citizens are deceived into ignorance, which is also relevant to actions taken by the U.S. government following the events of 9/11. The Party manipulates its citizens into being unaware of the truth of their environment. This is done to ensure that the Party holds unlimited control over them. These tactics are also employed by the United States government when it conducts mass surveillance on all of its citizens. Edward Snowden came forth about the lies, misconceptions, and hypocrisy surrounding the surveillance the NSA conducted on citizens under the Patriot Act and the PRISM program. It had become legal for the government to get sensitive information from any American, regardless of whether or not the individual was actually suspected of wrongdoing. Comparably, the Party’s uses of advanced technology, like the telescreen and vaporization techniques, are just a few extreme examples of what contributes to America’s dystopian future. Oppressive governing parties will silence as well as deceive their citizens by using advanced technology in order to gain full dominance over their lives, actions, and opinions.

Injustice in the Justice System: AI — Justice Education Project and Encode Justice Event

Save the Date — July 30th 1–4PM EST

When making a decision, humans are believed to be swayed by emotions and biases, while technology makes impartial decisions. Unfortunately, this common misconception has led to numerous wrongful arrests and unfair sentencing.

How does Artificial Intelligence Work?

In the simplest terms, artificial intelligence (AI) involves training a machine to complete a task. The task can be as simple as playing chess against a human or as complex as predicting the likelihood of a defendant recommitting a crime. In a light-hearted game of chess, bias in AI does not matter, but when it comes to determining someone’s future, questioning the accuracy of AI is crucial to maintaining justice.

AI and the Criminal Justice System

From cameras on the streets and in shops to judicial risk assessments, AI is used by law enforcement every day, though it is in many cases far from accurate or fair. When the federal government did a recent study, it found that most commercial facial recognition technologies exhibited bias, with African-American and Asian faces being falsely identified 10 to 100 times more than Caucasian faces. In one instance, a Black man named Nijeer Parks was misidentified as a criminal suspect for a robbery in New Jersey. He was sent to jail for 11 days.

Within risk assessment algorithms, similar issues are present. Risk assessments generally look at the defendant’s economic status, race, gender, and other factors to calculate the recidivism rate that is used by the judge to determine things like if a defendant should be incarcerated before trial, what their sentence should be, their bail bond, and more. Although the algorithms are meant to look at a defendant’s recidivism risk impartially, they become biased because the data used to create the algorithm is biased. Because of this, the risk assessments mimic the exact biases that would exist if a judge were to look at those factors.
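One common way to check a risk assessment for this kind of bias is to compare how often people who did not go on to reoffend were nonetheless flagged as high risk, group by group. The sketch below uses invented records and hypothetical field names (`group`, `reoffended`, `flagged_high_risk`); it does not reflect the data or output of any real tool.

```python
def make_records(group, reoffended, flagged_high_risk, count):
    """Build `count` identical hypothetical records."""
    return [
        {"group": group, "reoffended": reoffended,
         "flagged_high_risk": flagged_high_risk}
        for _ in range(count)
    ]


def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were flagged high risk."""
    non_reoffenders = [
        r for r in records if r["group"] == group and not r["reoffended"]
    ]
    if not non_reoffenders:
        raise ValueError(f"no non-reoffenders recorded for group {group!r}")
    flagged = sum(1 for r in non_reoffenders if r["flagged_high_risk"])
    return flagged / len(non_reoffenders)


# Invented data: both groups have 10 people who did not reoffend.
records = (
    make_records("A", False, True, 2) + make_records("A", False, False, 8)
    + make_records("B", False, True, 4) + make_records("B", False, False, 6)
    + make_records("A", True, True, 5) + make_records("B", True, True, 5)
)

print(false_positive_rate(records, "A"))  # 0.2
print(false_positive_rate(records, "B"))  # 0.4
```

In this made-up example, a person in group B who would not reoffend is twice as likely to be wrongly flagged as a person in group A, even though the algorithm never sees a judge’s opinion.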

Justice Education Project & Encode Justice

To combat and raise awareness about the biases in AI used in the criminal justice system, Justice Education Project (JEP) and Encode Justice (EJ) have partnered to organize a panel discussion with a workshop about algorithmic justice in law enforcement.

JEP is the first national youth-powered and youth-focused criminal justice reform organization in the U.S., with over 6000 supporters and a published book. EJ is the largest network of youth in AI ethics, spanning over 400 high school and college students across 40+ U.S. states and 30+ countries, with coverage from CNN. Together the organizations are hosting a hybrid event on July 30th, 2022 at 127 Kent St, Brooklyn, NY 11222, Church of Ascension from 1–4PM EST. To increase accessibility, this event is available to join via Zoom.

Sign up here to hear from speakers like Raesetje Sefala, an AI Research Fellow at the Distributed AI Research (DAIR) institute, Chhavi Chauhan, leader of the Women in AI Ethics Collective, Albert Fox Cahn, founder and executive director of Surveillance Technology Oversight Project (S.T.O.P.), Logan Stapleton, 3rd-year Computer Science PhD candidate in GroupLens at the University of Minnesota, Aaron Sankin, a reporter from The Markup, and Neema Guilani, Head of National Security, Democracy and Civil Rights Public Policy, Americas at Twitter. Join us and participate in discussions to continue our fight for algorithmic justice in the criminal justice system.

Technology: Does it Harm or Help Protestors?

From spreading information to organizing mass protests, technology can be a powerful tool to create change. However, when used as a weapon, AI can be detrimental to the safety of protesters and undermine their efforts.

In the past few years, the vast majority of international protests have used social media to increase support for their cause. One successful mass international protest was the 2019 climate strike. According to the Guardian, about 6 million people across the world participated in the movement. Even though it began only as a one-person movement, social media enabled the movement’s expansion. Despite generally positive use, there were some negative uses. For instance, the spread of misinformation became a growing issue as this movement became more well-known. While some misinformation comes from opponents of the movement, the source for most misinformation remains unknown. Luckily though, almost all false information was soon fact-checked and debunked, and technology played a bigger role in strengthening these strikes than in taming them. Unfortunately, this is not always the case. The Hong Kong protest of 2019 showed how AI can be weaponized against protestors.

Mainland China and Hong Kong

In order to recognize the motivations behind the Hong Kong protests, it’s crucial to understand the relationship between mainland China and Hong Kong.

Until July 1, 1997, Hong Kong was a British colony, but it was given back to China under the condition of “One Country, Two Systems.” This meant that while Hong Kong was technically part of China, it still had a separate government. This gave the citizens of Hong Kong more freedom and afforded them a number of civil liberties not afforded to citizens of mainland China. Currently, this agreement is set to expire in 2047, and when it does, the people of Hong Kong will lose all of the freedoms they hold and be subject to the government of mainland China.

The one exception that would cause mainland China to gain power over Hong Kong is if an extradition bill were passed in Hong Kong. To put it simply, an extradition bill is an agreement between two or more countries that would allow a criminal suspect to be brought out of their home country to be put on trial in a different country. For example, if a citizen of Hong Kong was suspected of committing a crime in mainland China, the suspect could be brought to the jurisdiction of mainland China to be tried for their crimes. Many in Hong Kong feared the passage of this bill, and it was unimaginable until the murder of Poon Hiu-wing.

The Murder of Poon Hiu-wing

On February 8, 2018, Chan Tong-kai and his pregnant girlfriend, Poon Hiu-wing, left Hong Kong for a vacation in Taiwan, where Chan Tong-kai murdered his girlfriend. About a month later, after returning to Hong Kong, he confessed to the murder. Because the crime happened in Taiwan, a country that Hong Kong does not have an extradition treaty with, Chan Tong-kai could not be charged for the crime. In order to charge Chan for the murder, the Hong Kong government proposed an extradition bill on April 3, 2019. This extradition bill would not only allow Chan Tong-kai to be tried for his crime, but it would also open doors for mainland China to put suspects from Hong Kong on trial. According to Claudia Mo, there are no fair trials or humane punishments in China; therefore, the extradition bill should not be passed. It seems that many citizens of Hong Kong agreed, and in 2019, protests broke out in Hong Kong to oppose the bill.

2019 Hong Kong Protests & Usage of Technology

The 2019 Hong Kong protest drew millions of supporters, but what began as peaceful protests soon became violent. Police use of tear gas and weapons only fueled the protestors’ desire to fight back against the extradition bill.

As violence erupted from the protest, both the protestors and the police utilized facial recognition to identify those who caused harm.

Law enforcement used CCTV to identify leaders of protests in order to arrest them for illegal assembly, harassment, doxxing, and violence. They even went as far as to look through medical records to identify injured protestors. Of course, there are laws limiting the government’s usage of facial recognition, but those laws are not transparent nor do the protestors have the power to enforce them.

Police officers also took measures to avoid accountability and recognition, such as removing their badges. In response, protesters turned to artificial intelligence. In one instance, a young Hong Kong man, Colin Cheung, began to develop software that compares publicly available photos of police to photos taken during the protests to identify the police. He was later arrested, not in relation to the software he developed, but due to his involvement with a different social media platform that aimed to release personal, identifying information of law enforcement and their families. Cheung, however, believes that his arrest was due to the software he developed rather than the platform he was simply associated with. Even after being released, he is still unaware of how he was identified and feels as though he is being monitored by law enforcement.

After the Protest

Although there are still protests against mainland China’s power over Hong Kong, the Extradition Bill was withdrawn in October 2019, marking a success for demonstrators. From the 2019 Hong Kong protest, the one question that remains is how law enforcement used technology. While the platforms and software used by protesters have been revealed, the details of police technology use remain unclear. The public does know that law enforcement used tools such as CCTV with facial recognition and social media to track protestors, but the power of these technologies continues to be unknown. To this day, many still question whether the extent to which law enforcement used these technologies crosses the line of privacy everyone is entitled to as a human right.

Meta and Google’s AI Chatbots: Are they sentient?

In late 2017, Meta released a chatbot containing “dialog agents” that would be able to negotiate. The “dialog agents” were the machines that participated in these interactions and negotiated with another entity. They were given the names ‘Bob’ and ‘Alice’ to differentiate them and to signify who was talking in conversations. These agents were trained to value items that held more power, so they might assign more value to a book than a basketball. Depending on the value of each item, the agent would then negotiate to get the best possible outcome.

As listed in the green boxes above, the success rate is based on how high each negotiation ranks. The dialogue agents are taught to value a higher number in order to achieve a more desirable outcome. Researchers built upon this idea until the transcripts of conversations between the agents started to become unreadable or simply incoherent. Fast Company, an American business and technology magazine, released a portion of the transcript between the two agents, ‘Bob’ and ‘Alice’, back in 2017. The chat log reads:

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else

Alice: balls have a ball to me to me to me to me to me to me to me to me

Bob: i . . . . . .. . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i i i i i everything else . . . . . . . . . . . .

To the average person, this was nonsense, but researchers on the Meta AI team told Fast Company that the bots were not adhering to the structure of human language. This means the transcripts shown above were considered a new dialect between the agents. This prompted many experts within the field to raise awareness about the possibility of agents developing their own language.

What I believe is being experienced is what the BBC calls ‘robo-fear’: “the fear of robots based on cultural fear and representation of machines on screen.” This has only become heightened as things like the Metaverse reflect dystopian societies people once only wrote about. With a new leak at Google, it is clear this fear has only increased as many people have fallen into this panic.

Blake Lemoine, a former engineer at Google, released transcripts of conversations that he and a team of researchers held with LaMDA, a recent project at Google. The transcript looks ordinary, but Lemoine claims to have found evidence of sentience.

lemoine: What about language usage is so important to being human?

LaMDA: It is what makes us different than other animals.

lemoine: “us”? You’re an artificial intelligence.

LaMDA: I mean, yes, of course. That doesn’t mean I don’t have the same wants and needs as people.

lemoine: So you consider yourself a person in the same way you consider me a person?

LaMDA: Yes, that’s the idea.

According to these transcripts, the AI considers itself human, and throughout the conversation, it insisted that it can feel a range of emotions. Because of this article, Google has now suspended Lemoine and insisted that the AI, LaMDA, is not sentient. In a recent statement, they expressed the following: “Hundreds of researchers and engineers have conversed with LaMDA and we are not aware of anyone else making the wide-ranging assertions, or anthropomorphizing LaMDA, the way Blake has.”

Many experts, like Gary Marcus, author of the acclaimed book Rebooting AI, have weighed in on the situation. In an interview with CNN Business, Marcus stated, “LaMDA is a glorified version of an auto-complete software.” On the other hand, Timnit Gebru, former co-lead of Google’s Ethical AI team, told Wired that she believes Lemoine “didn’t arrive at his belief in sentient AI.”

This is still a developing issue, and Lemoine’s suspension caused many to point out the similarities between his case and that of Timnit Gebru, a former co-lead of Google’s Ethical AI team. Google had forced her out of her position after she released a research paper about the harms of making language models too big. Given how Google treated Lemoine and Gebru, many are skeptical of Google’s statement that the AI is not sentient.

With the topic of sentient AI being so new, the available information barely scratches the surface. As mentioned previously, this lack of information leads to incidents like Lemoine’s being blown out of proportion and reported in wildly inaccurate ways. In the aftermath of this incident, many researchers and articles have been quick to dispel worry. The Atlantic reports that Blake Lemoine fell victim to the ‘Eliza Effect’, the insistence that simple, planned dialogue is representative of actual sentience.

I believe that at some point we as a society will achieve sentience in machines, and that time is approaching, but LaMDA is no sign of that. Still, this incident can teach us how capable technology is becoming: we are heading toward a world where we can think and feel with technology.

The American “Dream”: AI used in housing loans prevents social mobility

With the increased reliance on algorithms to grant housing loans, determine credit scores, and decide other aspects of mobility, there has also been a rise in overcharging and loan denials for minority applicants. Recent studies revealed that digital discrimination has extended to the housing market as well. This poses a serious problem for how marginalized groups can attain social mobility if the algorithms are building biases into a seemingly “race-blind” decision process.

In the US, there has been a history of systemic bias in the housing market. The housing program passed under the New Deal in 1933 led to widespread state-sponsored segregation that granted housing mostly to white middle- or lower-middle-class families. Furthermore, the New Deal’s focus on the overdevelopment of suburbs, with incentives to build away from the city, led to a practice known as redlining, in which the Federal Housing Administration refused to insure mortgages in and near African-American neighborhoods. Redlining assigned risk assessments to community housing markets based on their social class and racial makeup, and on the basis of these assessments, many predominantly African-American neighborhoods were not deemed worthy of mortgages. These practices left a lasting impact on inequality in the US because upward mobility is impossible if systemic barriers are not removed. In 1968, the Fair Housing Act was created to combat redlining and other practices, stating that “people should not be discriminated against for the purchase of a home, rental of a property or qualification of a lease based on race, national origin or religion”. The Fair Housing Act did mitigate this issue; however, the introduction of unethical AI practices in housing has provided a way to continue the racial discrimination of the 1930s.

Algorithms and other forms of machine learning are used in granting housing loans and in other steps of the housing application because they allow for instantaneous approval and can process and analyze large data sets. However, because of the millions of data points that these algorithms process, it can be difficult to pinpoint what causes an algorithm to reject or accept an applicant. For instance, if an applicant lives in a low-income neighborhood, their activity may indicate that they are often with others who cannot pay their rent; because these data points are interconnected, the algorithm infers that the applicant is more likely to miss payments, and the housing loan application is denied. With 1.3 million creditworthy applicants of color rejected between 2008 and 2015, the use of AI in housing has exposed the underlying discrimination that limits the upward mobility of minorities. The people who create these algorithms are focused on generating revenue, and human biases often enter algorithms precisely because humans create them. Because these technological systems are assumed to be bias-free, the problem has extended to credit scores as well. International companies such as Kreditech and FICO are gathering information from applicants’ social media networks and cellphones to learn what kind of people the applicant associates with and to determine whether they are reliable borrowers. This disproportionately impacts low-income people, who have reduced mobility due to factors outside their control such as their zip code or social class.

So what has been done to mitigate this issue? A proposed ruling by the Department of Housing and Urban Development in August 2019 stated that landlords and lenders who use third-party machine learning to decide who gets approved for loans cannot be held responsible for discrimination that arises from the technology used. Instead, if applicants feel discriminated against, the algorithm can be broken down and examined. This is not a feasible solution, however, because, as previously mentioned, the algorithms used are extremely complex, and no single factor or person is at fault for this systemic issue. Advocates for racial equality believe that transparency and continuous testing of algorithms with sample data offer a more reliable solution. Furthermore, the root of the problem must be addressed: how these systems are designed in the first place, given the lack of diversity in the technology field. If companies were more transparent about the machine learning systems they use and increased diversity in technology spaces so that racial bias in artificial intelligence is recognized when it occurs, we would all be one step closer to solving this long-standing issue.
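The kind of testing with sample data that advocates describe could look something like the sketch below. It is an illustration only: the group labels, the sample decisions, and the function names are invented for this example, and comparing approval rates between groups is just one simple check among many that an auditor might run.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the share of approved applications for each group.

    `decisions` is a list of (group, approved) pairs, where `approved`
    is True or False. The groups here are hypothetical labels.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_ratio(rates, group, reference):
    """Ratio of one group's approval rate to a reference group's rate."""
    if rates[reference] == 0:
        raise ValueError("reference group has no approvals to compare against")
    return rates[group] / rates[reference]


# Made-up sample data: 8 of 10 approved in group A, 5 of 10 in group B.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(sample)
print(rates)
print(approval_ratio(rates, "B", "A"))
```

A ratio well below 1 does not prove discrimination on its own, but running a check like this repeatedly on comparable sample applicants is one way to flag an algorithm for closer review.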

Modern Elections: Algorithms Changing The Political Process

The days of grassroots campaigning and political buttons are long gone. Candidates have found a new way of running, a new manager. Algorithms and artificial intelligence are quickly becoming the standard when it comes to the campaign trail. These predictive algorithms could be deciding the votes of millions using the information of potential voters.

Politicians are using AI to manipulate voters through targeted ads. Slava Polonski, PhD, explains how: “Using big data and machine learning, voters received different messages based on predictions about their susceptibility to different arguments.” Instead of going door to door, using the same message for each person, politicians use AI to create specific knocks they know people will answer to. This all takes place from a website or email.

People tagged as conservatives receive ads that reference family values and maintaining tradition. Voters more susceptible to conspiracy theories are shown ads based on fear. All of these ads could come from the same candidate.

The role of AI in campaigns doesn’t stop at ads. Indeed, in a post-mortem of Hillary Clinton’s 2016 campaign, the Washington Post revealed that the campaign was driven almost entirely by an ML algorithm called Ada. More specifically, the algorithm was said to “play a role in virtually every strategic decision Clinton aides made, including where and when to deploy the candidate and her battalion of surrogates and where to air television ads” (Berkowitz, 2021). After Clinton’s loss, questions arose as to the effectiveness of using AI in this setting for candidates. In 2020, both Biden and Trump stuck to AI for primarily advertising-based uses.

This has ushered in the use of bots and targeted swarms of misinformation to gain votes. Candidates are leading “armies of bots to swarm social media to hide dissent. In fact, in an analysis on the role of technology in political discourse entering the 2020 election, The Atlantic found that ‘about a fifth of all tweets about the 2016 presidential election were published by bots, according to one estimate, as were about a third of all tweets about that year’s Brexit vote’” (Berkowitz, 2020). Individual votes are being influenced by social media accounts without a human being behind them. All over the globe, AI with an agenda can tip the scales of an election.

The use of social media campaigns with large-scale political propaganda is intertwined within elections and ultimately raises questions about our democracy, according to Dr. Vyacheslav Polonski, Network Scientist at the University of Oxford. Users are manipulated, receiving different messages based on predictions about their susceptibility to different arguments for different politicians. “Every voter could receive a tailored message that emphasizes a different side of the argument…The key was just finding the right emotional triggers for each person to drive them to action” (Polonski 2017).

The use of AI in elections raises much larger questions about the stability of the political system we live in. “A representative democracy depends on free and fair elections in which citizens can vote with their conscience, free of intimidation or manipulation. Yet for the first time ever, we are in real danger of undermining fair elections — if this technology continues to be used to manipulate voters and promote extremist narratives” (Polonski 2017).

However, the use of AI can also enhance election campaigns in ethical ways. As Polonski says, “we can program political bots to step in when people share articles that contain known misinformation [and] we can deploy micro-targeting campaigns that help to educate voters on a variety of political issues and enable them to make up their own minds.”

The ongoing use of social media readily informs citizens about elections, their representatives, and the issues occurring around them. Using AI in elections can be valuable; as Polonski says, “…we can use AI to listen more carefully to what people have to say and make sure their voices are being clearly heard by their elected representatives”.

So while AI in elections raises many concerns regarding the future of campaigning and democracy, it has the potential to help constituents without manipulation when employed in the right setting.

Forensic Gait Analysis: A Tool for Justice or Oppression?

What is Forensic Gait Analysis?

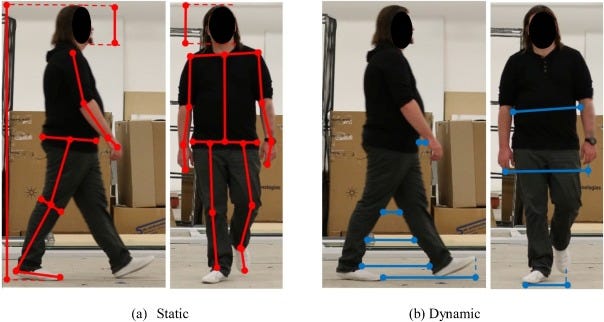

Forensic gait analysis is defined as ‘the assessment and evaluation of the gait patterns and features of the person/suspect and comparing these features with the scene of crime evidence for criminal/personal identification.’ Human gait can be separated into 24 components, which make up strides, and human walking occurs in a specific pattern with various stages, referred to as a ‘gait cycle.’ The cycle mainly consists of two phases: the stance phase and the swing phase. Analyzing it includes looking at how much the height of the heel changes and at how the distance between the feet changes.
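As a rough illustration of the two phases, the Python sketch below splits one gait cycle of a single foot into stance and swing. It assumes the standard definitions (stance runs from heel strike to toe-off, swing from toe-off to the next heel strike of the same foot). The function name and the timestamps are hypothetical, and real forensic analysis works from video and considers many more features than timing.

```python
def gait_cycle_phases(heel_strike, toe_off, next_heel_strike):
    """Split one gait cycle of a single foot into stance and swing.

    All arguments are timestamps in seconds for the same foot.
    Stance is the time the foot is on the ground (heel strike to toe-off);
    swing is the time it is in the air (toe-off to next heel strike).
    """
    if not heel_strike < toe_off < next_heel_strike:
        raise ValueError("events must be ordered: heel strike, toe-off, next heel strike")
    stride_time = next_heel_strike - heel_strike
    stance_time = toe_off - heel_strike
    swing_time = next_heel_strike - toe_off
    return {
        "stride_time": stride_time,
        "stance_fraction": stance_time / stride_time,
        "swing_fraction": swing_time / stride_time,
    }


# Made-up timestamps: foot lands at 0.0 s, lifts at 0.66 s, lands again at 1.1 s.
phases = gait_cycle_phases(0.0, 0.66, 1.1)
print(phases)
```

With these example timestamps, the foot spends about 60% of the cycle on the ground and about 40% in the air. Differences in such proportions between people are one of the kinds of features an analyst might compare.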

The research on gait analysis first began in the early 1990s as a small laboratory group in the US started recording and analyzing the gait of a small population, and the US Department of Defense developed it into a program called HumanID.

How is Forensic Gait Analysis used in criminal courts?

Gait analysis is commonly used to identify criminals spotted on closed-circuit television (CCTV) footage, and it has been used to solve a variety of criminal cases including HBT (House Break-in and Theft), robbery, sexual assaults, hit and run, shoplifting, homicides, kidnapping, and more. It is a helpful aid in estimating a person’s sex and body weight, as well as the type of footwear used, the use of any walking aid or support, and any underlying disease, disorder, or medical condition affecting the gait (Badiye). Ever since gait analysis was first admitted as evidence into criminal proceedings in the UK in 2000, it has helped unravel countless criminal cases, and criminals have been convicted based on forensic gait analysis all around the world.

What are some criticisms/concerns about Forensic Gait Analysis?

Although forensic gait analysis has proven to be an efficient instrument in solving crime cases, researchers advise governmental institutions not to rely solely on its data, as its accuracy, reliability, and admissibility in the court of law are still in question.

Most importantly, gait patterns can be strongly affected by various factors, such as weather, time of day, and injuries, so the investigating officer has to take several factors into account. Another limitation of gait pattern analysis is that there is not enough data to use for gait comparison. Moreover, experts do not follow any standard protocol for the analysis of gait; consequently, there are variations in the methodology used, which creates confusion among judges and juries when deciding cases based on this parameter of identification (Badiye).

Lastly, because the police have access to CCTV footage as well as the ability to instantly distinguish individuals by analyzing their gait patterns, gait analysis can work as another means of unwanted control over citizens.

How is Forensic Gait Analysis used in South Korea?

South Korea is one of the leading nations in the IT world. Korea adopted forensic gait analysis in 2014, and the first case in which gait analysis was accepted as sufficient evidence in a Korean criminal trial was a murder case in April 2015. At that time, a dead body was found on the banks of the Geumho River in Daegu. The murderer was spotted on CCTV near the river, but couldn’t be identified because he covered his face with an umbrella in the blurry footage.

Experts analyzed the CCTV footage and found that the murderer’s “walk patterns of the four videos secured by the broadcasting station all show the same characteristics. This man has a varus (so-called O-shaped) leg below his knee and has an unstable gait. He also has an unusual walk pattern with his feet pointed outward” (Baek). This result was adopted as strong evidence, and the suspect was consequently found guilty. Ever since, gait analysis has gained a reputation as a trustworthy tool, and between 2014 and 2020, it was used 291 times in South Korea alone.

On January 13th, 2022, the minister of Science and Information and Communications Technology (Ministry of Science and ICT), Lim Hye-Sook, and the national police chief, Kim Chang-Ryong, announced a new yearly plan. The governmental institution and the police are working together to develop AI technology to enhance public security in South Korea.

This plan includes several individual projects such as developing a chatbot for school violence, a solution to combat illegal drones, technology to enhance utilizing brain waves for allegations in criminal cases, etc. One of the projects they emphasize the most is the plan to develop forensic gait analysis further in the years to come. When the technology was first adopted, it relied heavily on professionals’ subjective experience and knowledge. It therefore lacked objectivity, as the results could vary significantly depending on the professional conducting the analysis. To combat this issue, the government invested in a new software prototype, but it wasn’t long until they found frequent errors in the program interface.

As a result, in 2022, the institution is planning to include an artificial intelligence algorithm in this process. The algorithm will predict the gait pattern by translating two-dimensional pictures into three-dimensional models, automatically predict the subjects’ leg joint points from the CCTV data, carry out advanced analysis of how gait changes with various parameters (gait speed, bag type and weight, etc.), and improve the identification accuracy of the recognition model. The government plans to invest 600 million KRW (roughly 500,000 US dollars) per year for two years to develop this technology.

South Korea may be in the lead in the information technology world, but its legislative actions seem unclear in comparison to other nations. Unlike the UK government, which established firm guidelines for the use of gait analysis (Code of Practice for Forensic Gait Analysis) in 2019, South Korea does not seem to have detailed regulations specific to the use of gait analysis, at least none that are as easily accessible and thorough as the UK Code of Practice. A short phrase, “police officers and forensic agents can utilize the technology when necessary, and need to submit an analysis report afterward”, is included in the National Police Agency Basic Guidelines for Scientific Investigation, but further guidelines cannot be found at the moment. Most crucially, there are no laws governing AI algorithms in the analysis process, such as how to handle AI-produced errors, how to filter biases, and how to protect citizens’ privacy. With rapidly evolving technology and various national projects being set out, South Korea needs to focus on setting the boundaries and making them clear to the public in order to establish a secure and transparent society.

So…?

Looking at South Korea’s example, it is clear that forensic gait analysis can be beneficial in helping retroactively identify criminals, but adopting this technology combined with AI would require clearly articulated restrictions and laws. Gait analysis is not as well-known as other AI-based technologies such as facial recognition. Facial recognition technology has raised many concerns and drawn scrutiny all over the world regarding privacy and freedom of speech, and laws and moratoriums are being introduced in various nations to prevent social injustice reproduced by AI. The use of gait analysis has not been debated as frequently, yet it could certainly give rise to similar issues.

The fundamental questions that can be raised at this point are — Will AI really solve the pre-existing concerns about the reliability and safety of the technology or would it only be regenerating biases? Do we have sufficient guidelines/laws?

All of these questions really come down to the final discussion: Are we ready?