The United Auto Workers’ (UAW) Stand Up Strike recently produced tentative agreements on new union contracts with Ford, Stellantis, and General Motors. The strike was notable for several reasons: its unique striking tactics, its decision to take on all of the “Big 3” automakers at once, and its stirring callback to the historic 1936–1937 Sit Down Strikes. Alongside demands for better pay, the reinstatement of cost-of-living adjustments, and an end to the tiered employment system (all of which the union won in the new contracts), one unique and unmet demand has drawn particular attention: a four-day, thirty-two-hour workweek with no loss of pay. The demand responds to the unsettling reality of autoworkers laboring more than seventy hours a week to make ends meet.

The history of the forty-hour workweek is intimately tied to autoworkers. Workers at Ford were among the first to enjoy a forty-hour workweek in 1926, at a time when many Americans routinely worked far longer weeks. Over a decade later, the labor movement won passage of the Fair Labor Standards Act (FLSA), which codified the forty-hour workweek along with rules on overtime pay, minimum wages, and child labor. Sociologist Jonathan Cutler explains that, when the FLSA passed, UAW leaders already had their eyes on a 30-hour workweek.

The four-day workweek has garnered attention in recent years as companies have experimented with a 100–80–100 model: 100% of the pay for 80% of the time, with 100% of the output. Holding output constant while cutting hours by a fifth means each hour worked must be about 25% more productive (1 ÷ 0.8 = 1.25). Participating companies report gains in productivity, profits, morale, health, and general well-being. The altered schedule proved overwhelmingly popular among CEOs and employees alike, and many workers said no amount of money could persuade them to return to a five-day week. In one widely cited pilot, 92% of participating companies chose to keep the four-day week after the trial ended. It’s just a good policy all around: good for business, good for health, good for happiness.

While these overwhelmingly successful pilots have warmed businesses and employees to the idea of a shorter week, one increasingly relevant factor may push the conversation decisively forward: artificial intelligence (AI). Goldman Sachs has estimated that roughly two-thirds of American jobs are exposed to some degree of AI automation, and that most of those jobs are more likely to be complemented by AI than replaced by it. Many white-collar professions in particular stand to see dramatic gains in efficiency as AI is integrated into the workplace. As reliance shifts steadily from human labor to AI, workers should gain the leisure time to actually enjoy the fruits of their labor. We ought to recognize that prosperity is not an end in itself but a means to well-being. If AI-driven productivity gains can sustain high output while freeing up time for family, good habits, and even consumption, our spirits, our health, and our economy will all reap the benefits.

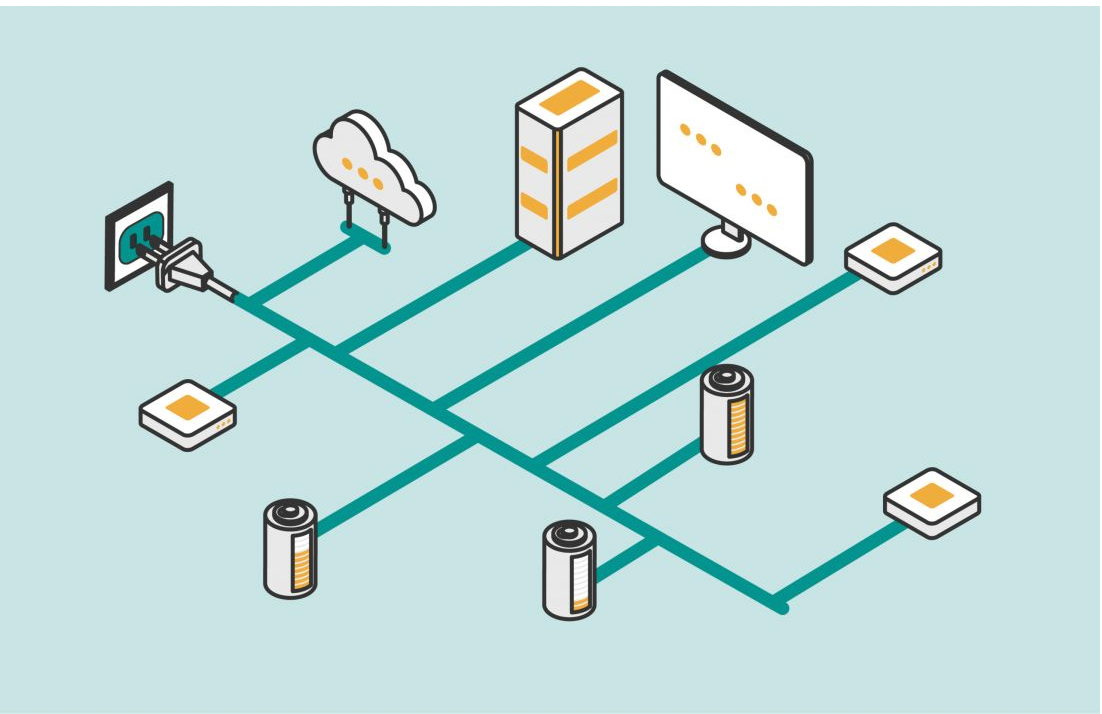

However, a collective action problem stands in the way of a full-throttle, nationwide embrace of a shorter workweek. Although many individual businesses find the four-day week profitable, at first glance it may not seem to be in any single company’s interest to cede labor hours to its competitors; a firm may expect to be outperformed if it does not match its rivals’ inputs. But if all firms in a market adopted a four-day week (or were subject to regulations requiring it), they would compete on a level playing field, and the extra day off might boost aggregate demand enough to compensate firms with strong returns. This suggests that the best way to realize a shorter week is federal legislation: amending the FLSA to codify a 32-hour workweek and mandate the corresponding overtime pay.

Representative Mark Takano of California, alongside several colleagues, has introduced a bill to accomplish just that, and it has been endorsed by a host of advocacy organizations, labor federations, and think tanks. Bernie Sanders, Chair of the Senate Health, Education, Labor, and Pensions Committee, has enthusiastically endorsed the idea, specifically citing the advantages AI brings to the workplace. Regarding the proposed legislation, Heidi Shierholz, President of the Economic Policy Institute, put it powerfully:

“Many workers are struggling to balance working more hours to earn more income against having more time to focus on themselves, their families, and other pursuits. However, while studies have shown that long working hours hurt health and productivity, taking control of work-life balance is often a privilege only afforded to higher-earning workers… This bill would help protect workers against the harmful effects of overwork by recognizing the need to redefine standards around the work week. Reducing Americans’ standard work week is key to achieving a healthier and fairer society.”

Despite the rosy picture I have painted, the odds of getting Fridays off for good anytime soon, whether through union action or new labor law, are slim, just as they were for the UAW. Sorry. Such are the pragmatics of political and economic reality. Still, as AI continues to change the game, we will be positioned to ask searching questions about the nature of work, and to imagine creatively what a new world could look like in the age of the AI Revolution. Maybe this is part of it: all of human progress, culminating in… Thirsty Thursdays. Every week.