In recent news, NFTs, or non-fungible tokens, have garnered attention for their environmental impact. NFTs are digital assets that represent something in the real world, such as a piece of art. This has drawn attention to the environmental impact of the internet and of technology in general. Recently, AI models have been shown to use vast amounts of energy while being trained for their intended purposes.

How much energy is being used?

While training AI models, a team of researchers led by Emma Strubell at the University of Massachusetts Amherst noticed that the models used exorbitant amounts of energy. For an AI model to do its job, it first has to be trained, and how it is trained depends on the type of model and its purpose. The team calculated that even a single basic training run emitted thirty-nine pounds of carbon dioxide before the model was fully trained, and that larger models emitted far more.

At the high end, the researchers concluded that training just one neural network accounts for “roughly 626,000 pounds of carbon dioxide” released into the atmosphere. That is about five times the emissions a car releases over its lifetime.
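As a rough check on that comparison, the arithmetic can be sketched in a few lines. The 626,000-pound figure and the factor of five come from the text above. The per-car value is simply derived from those two numbers, not an independent measurement, and the function names are made up for this illustration.

```python
# Figures quoted in the article.
TRAINING_EMISSIONS_LB = 626_000  # "roughly 626,000 pounds of carbon dioxide"
CAR_LIFETIMES = 5                # a car's lifetime emissions, five times over

# Standard unit conversion (pounds per metric ton).
LB_PER_METRIC_TON = 2204.62


def implied_car_lifetime_lb(total_lb, lifetimes):
    """Emissions of one car lifetime implied by the article's comparison."""
    return total_lb / lifetimes


def pounds_to_metric_tons(pounds):
    """Convert pounds to metric tons."""
    return pounds / LB_PER_METRIC_TON


per_car_lb = implied_car_lifetime_lb(TRAINING_EMISSIONS_LB, CAR_LIFETIMES)
training_tons = pounds_to_metric_tons(TRAINING_EMISSIONS_LB)

print(f"Implied emissions per car lifetime: {per_car_lb:,.0f} lb")
print(f"Training emissions: about {training_tons:,.0f} metric tons of CO2")
```

In other words, the comparison implies that one car emits a little over 125,000 pounds of carbon dioxide across its lifetime.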

This AI model is one of hundreds releasing large amounts of emissions that harm the environment. AI models have been used in medicine: chatbots help identify symptoms in patients, and a learning algorithm created by researchers at Google AI Healthcare has been trained to identify breast cancer. In the coming years, however, the environmental effects may counteract the good these models are trying to do.

What is the real harm? And how does this happen?

This energy usage, multiplied across thousands of AI models and coupled with Earth’s worsening climate crisis, may push our emissions to an all-time high. With 2020 as one of the hottest years on record, the emissions from these models only add to the problem of climate change. Given the growth of the tech field, the high emission rates of algorithmic technology are alarming.

The demand for AI to solve a multitude of problems will continue to grow, and with it comes more and more data. Strubell concludes that “training huge models on tons of data is not feasible for academics.” This is due to a lack of access to advanced computers that are better suited to processing such large amounts of data. According to Strubell and her team, these advanced computers would help synthesize and process the information with generally less carbon output.

She goes on to say that, as time goes on, it becomes less feasible for researchers and students to process large amounts of data without these computers. Groundbreaking studies often come at the cost of the environment, which is why advanced computers are necessary to keep making progress in the field of AI.

What are the solutions?

Currently, the best solution proposed by the researchers is to invest in faster and more efficient computers to process these large amounts of information. Funding for this type of research would give researchers computers that handle the data more efficiently, cutting down on energy usage and lessening the environmental impact of training AI models.

Credits to ofa.mit.edu

At MIT, researchers were able to cut down on their energy usage by using computers donated to them by IBM. With these computers, the researchers were able to run millions of calculations and write important papers in the AI field.

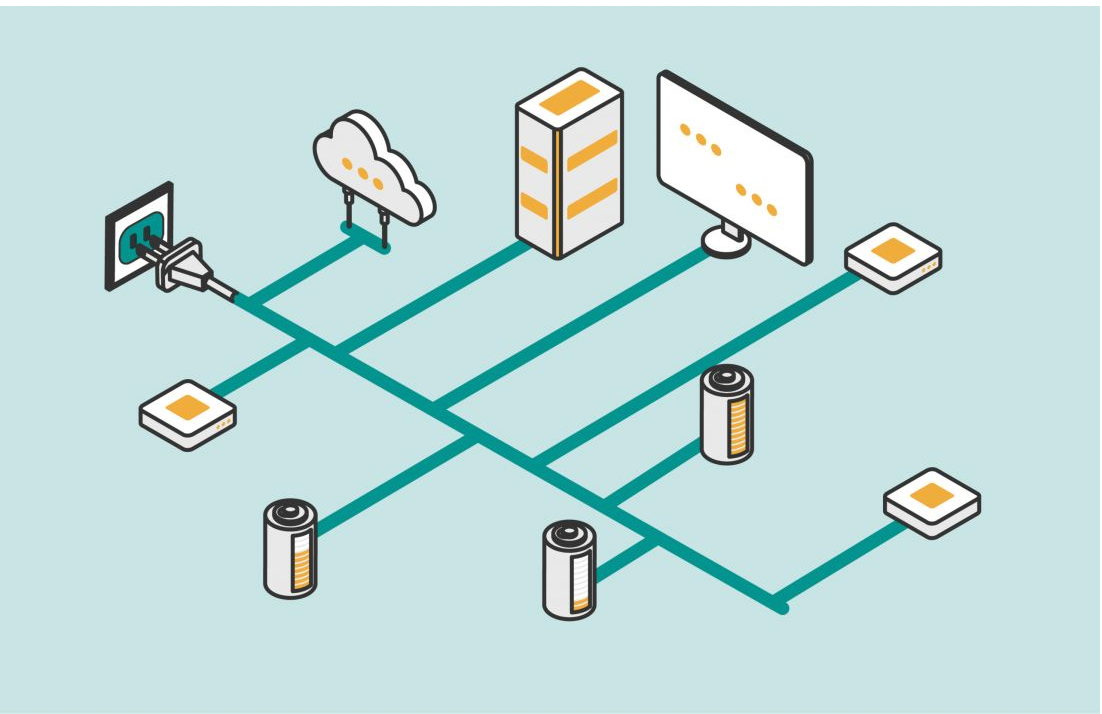

Another solution from MIT is the OFA network, or once-for-all network. An OFA network is trained to “support versatile architectural configurations.” Instead of spending large amounts of energy training each model individually, this approach trains one general network and then draws on specific parts of it to support each piece of software. This helps to cut down on the overall cost of these models.

There were concerns that this approach could compromise the accuracy of the system, so the researchers tested whether that was true. It was not: they found that using the OFA network did not reduce the accuracy of the AI systems.
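To make the idea of one general network serving several smaller models more concrete, here is a toy sketch. It is not the MIT researchers' implementation. It assumes a single shared layer whose narrower versions simply reuse the first few rows of the same weights, and the names `make_shared_layer`, `sub_layer`, and `forward` are hypothetical.

```python
import random


def make_shared_layer(n_inputs, n_outputs, seed=0):
    """Create one 'general' layer: a list of weight rows, one per output unit."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_outputs)]


def sub_layer(shared, width):
    """Pick a narrower layer that reuses the first `width` rows of the shared weights."""
    if not 1 <= width <= len(shared):
        raise ValueError("width must be between 1 and the shared layer's size")
    # Slicing reuses the same row objects, so no weights are copied or retrained.
    return shared[:width]


def forward(layer, inputs):
    """Apply the layer to the inputs, followed by a ReLU activation."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in layer]


shared = make_shared_layer(n_inputs=4, n_outputs=8)
small = sub_layer(shared, width=2)  # e.g. for a low-power device
large = sub_layer(shared, width=8)  # e.g. for a server

inputs = [1.0, 0.5, -0.5, 2.0]
print(len(forward(small, inputs)), len(forward(large, inputs)))
```

The point of the sketch is only that both the small and the large model read from the same set of weights, so the expensive training step would happen once instead of once per model.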

With these solutions in mind, it is important to understand that our future is not hopeless. Researchers are actively looking for ways to alleviate this issue, and with the right plans and actions, the innovations of the future can help rather than harm.