TikTok is an app that needs no introduction. Its name has been plastered across television screens, both because of the national security concerns raised by President Trump and because of its heavy use among teenagers in schools worldwide. The appeal of easy fame and virality has its audience hooked, with about 732 million monthly active users worldwide (DataReportal, 2021). Popular TikTok creators like Charli D’Amelio, Addison Rae, and Noah Beck have even gone on to become high-fashion brand ambassadors, star in films, and appear on magazine covers. The connection between most of these creators and their fame is unsettling: nearly all of them are Caucasian.

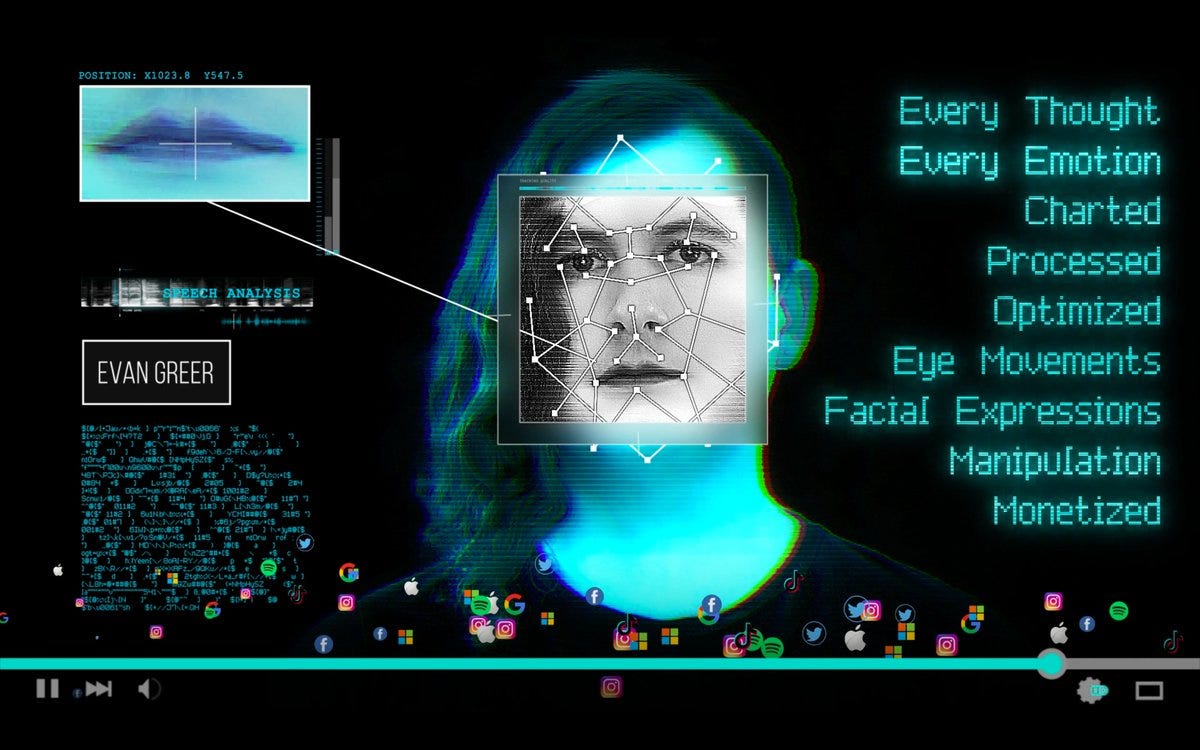

Facial Recognition Technology

Facial Recognition Technology (FRT) is a form of biometric technology that analyzes a person’s facial landmark map, such as the placement of their nose, eyes, and mouth, and compares it with a collection of other landmark maps in order to identify someone. The issue is that the technology misidentifies people, specifically people of color, because its databank consists mostly of Caucasian facial landmark maps. In 2019, NIST (the National Institute of Standards and Technology) published a study which found that, depending on the algorithm, FRT is 10–100 times more likely to misidentify Asian and Black individuals than Caucasian individuals.
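To make the idea of "comparing landmark maps" concrete, here is a minimal sketch in Python. It is only an illustration under stated assumptions: the landmark names, the coordinates, and the helper functions `landmark_distance` and `best_match` are all hypothetical, and real systems generally rely on learned face embeddings rather than a handful of raw points.

```python
import math

# Hypothetical landmark maps: (x, y) positions scaled to a 0-1 range.
# Every coordinate here is invented for illustration, not measured data.
probe = {
    "left_eye": (0.30, 0.40),
    "right_eye": (0.70, 0.40),
    "nose": (0.50, 0.60),
    "mouth": (0.50, 0.80),
}

gallery = {
    "person_1": {
        "left_eye": (0.31, 0.41),
        "right_eye": (0.69, 0.40),
        "nose": (0.50, 0.61),
        "mouth": (0.50, 0.79),
    },
    "person_2": {
        "left_eye": (0.25, 0.35),
        "right_eye": (0.75, 0.35),
        "nose": (0.50, 0.55),
        "mouth": (0.50, 0.85),
    },
}


def landmark_distance(a, b):
    """Mean Euclidean distance over the landmarks present in both maps."""
    shared = sorted(a.keys() & b.keys())
    if not shared:
        raise ValueError("the two maps share no landmarks")
    return sum(math.dist(a[name], b[name]) for name in shared) / len(shared)


def best_match(probe_map, gallery_maps):
    """Return the gallery key whose landmark map is closest to the probe."""
    return min(
        gallery_maps,
        key=lambda name: landmark_distance(probe_map, gallery_maps[name]),
    )


match = best_match(probe, gallery)
```

A matcher like this can only return the nearest face it already knows. If the gallery is dominated by one group, faces from other groups are compared against fewer relevant examples, which is one plausible route to the kind of misidentification described above.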

“Middle-aged white men generally benefited from the highest accuracy rates.” -Drew Harwell, The Washington Post.

With this background knowledge in mind, I posed a question that would later become an extensive research study with surprising results…

Does TikTok use facial recognition technology within its algorithm, and if so, how important is race when it comes to virality?

The Research

After a quick Google search, I came across an article by a group of Chinese scientists at the South China University of Technology. The article proposed far more accurate technology that TikTok could implement in its system. It pointed out the failures of TikTok’s current software and the successes of the software the researchers had developed. Not only was facial recognition at play, but it was being used to rank users on a scale of 1–5 based on their beauty.

The first page of the research proposal gives a summary of facial beauty prediction (FBP), which assesses a person’s attractiveness the same way facial recognition technology assesses a person’s landmark map for identification. FBP uses the fundamental infrastructure of FRT to rank a person’s beauty. I wanted to see what effect race had on virality, so for three months, subjects of differing races (Asian, Caucasian, and Caucasian with Hispanic ethnicity) posted videos of the same style and format, with the same sound, at the same time, on the same day. The only difference between the videos was the subject in front of the camera.

The question then shifted to how far race affects virality. Who would be the most popular of the three?

The Data

After three full months, the subjects’ analytics (their average number of comments, likes, and additional followers) were recorded, and headshots were taken to mimic the way FRT creates facial landmark maps. Following a facial dot map (fig. 2), the basic structure of their faces was depicted and displayed side by side (fig. 3–4). Through this, the differing landmark maps were compared along with their profile data.

Subject B ranked highest in both the number of likes and the number of views. The startling discovery was not her newfound popularity, but the cause of it. Subject B was Hispanic, with fair skin and a small face. Subject C was Caucasian, with a bigger face and brow. Subject A was Asian, with a larger surface area around her cheeks and brow. From this, I concluded that TikTok does not apply FRT and FBP simply on the basis of a person’s genetic makeup, but on the basis of the composition and coloration of their face and features.

When I compared Subject B’s facial dot map to that of Charli D’Amelio, the similarities were striking (fig. 5).

This evidence anecdotally supports the limited diversity of popular creators, yet it remains to be proven why it’s more common to find a “white passing” individual or a person with Caucasian features on your For You Page.

If you need more evidence, don’t worry. The subjects used new accounts and didn’t interact with any of the videos shown on their For You Pages. While scrolling through their For You Pages for five minutes, all three watched only 48 videos, and on average 41.66% of those videos featured white or “white passing” individuals.
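For readers who want to see what that percentage means in raw counts, here is a quick back-of-the-envelope check. It assumes that each subject watched 48 videos (the text could also be read as 48 in total), and the variable names are mine, chosen only for this sketch.

```python
# Figures taken from the paragraph above.
videos_watched = 48        # videos seen in five minutes (assumed per subject)
reported_share = 0.4166    # 41.66% white or "white passing", as reported

# Convert the reported percentage back into a whole number of videos.
raw_count = videos_watched * reported_share   # 19.9968
approx_count = round(raw_count)               # nearest whole video

# The share implied by that whole-number count.
implied_share = approx_count / videos_watched

print(f"about {approx_count} of {videos_watched} videos "
      f"({implied_share:.2%} of the feed)")
```

Under that assumption, the reported figure works out to roughly 20 of every 48 videos, or 5 in 12.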

The Conclusion

This research began with the question of whether or not TikTok used facial recognition technology and the extent to which race played into virality. From the evidence found, I was able to answer this. TikTok, in its own way, uses facial recognition technology embedded in facial beauty prediction. Race seems to play an underlying role; however, it is not the ultimate deciding factor. Through my research I also found that TikTok’s algorithm is sensitive and incredibly harsh on new users. Many claim your first five videos are the foundation of your career on TikTok, allowing the app to categorize your account based on the content you produce. If your account is not managed correctly, you will be deemed an unreliable source of traction for the app and you’ll experience what many call a “flop” (TechCrunch). This goes to show that the app relies on various factors when determining a user’s popularity, and race is definitely on the list.

This raises concerns for the future of AI and social media. Timelines, feeds, and pages already seem like algorithmic projections of unattainable beauty standards and ideals. The continued use and development of such technologies will keep driving users down a path of insecurity and unconscious bias.